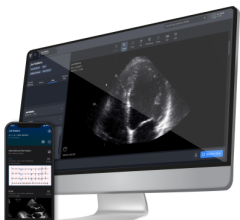

November 19, 2018 – Medical artificial intelligence (AI) company Bay Labs and Northwestern Medicine announced that the first patient has been enrolled in a first-of-its-kind study. The study will evaluate the use of Bay Labs’ EchoGPS cardiac ultrasound guidance software to enable certified medical assistants (CMAs), medical professionals with no prior scanning experience, to capture high-quality echocardiograms. The study will also evaluate the use of Bay Labs’ EchoMD measurement and interpretation software suite to detect certain types of heart disease among patients 65 years and older undergoing routine physical examinations in primary care settings.

“Deep learning will have a profound impact on cardiac imaging in the future, and the ability to simplify acquisition will be a tremendous advance to bring echocardiograms to the point-of-care in primary care offices,” said Patrick M. McCarthy, M.D., chief of cardiac surgery at Northwestern Memorial Hospital, executive director, Northwestern Medicine Bluhm Cardiovascular Institute and principal investigator on the project.

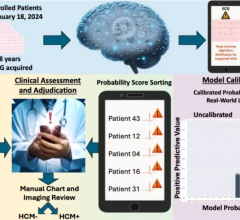

“SHAPE: Seeing the Heart with AI Powered Echo” is the first study to evaluate AI-guided ultrasound acquisition by CMAs. SHAPE is a non-randomized study that will enroll approximately 1,200 patients at Northwestern Medicine sites, including Northwestern Medicine Central DuPage Hospital in Winfield, Ill., and Northwestern Medicine Regional Medical Group primary care clinics. The primary objective of the study is to determine whether CMAs can use the Bay Labs EchoGPS to obtain diagnostic-quality echocardiograms, and whether those images, when reviewed by cardiologists with the assistance of the EchoMD software suite, will enable detection of more patients with cardiac disease in a primary care setting than a standard physical examination with an electrocardiogram (ECG).

The SHAPE study is part of Bay Labs’ ongoing partnership with Northwestern Medicine to explore new ways to apply AI to clinical cardiovascular care. It fits into Northwestern’s larger AI initiative, which focuses on harnessing the power of AI to advance the study and treatment of cardiovascular disease. The AI initiative is funded, in part, by a $25 million gift from the Bluhm Family Charitable Foundation, formed by Neil G. Bluhm, a prominent Chicago philanthropist and real estate developer. For more details on the SHAPE study, including enrollment information, see ClinicalTrials.gov, identifier NCT03705650.

Bay Labs’ EchoGPS is investigational software integrated into an ultrasound system. EchoGPS uses AI to aid in the acquisition of echocardiograms by giving non-specialist users real-time guidance to obtain cardiac views. The EchoMD software suite assists cardiologists with automated review of the captured images. Bay Labs received U.S. Food and Drug Administration (FDA) clearance for its first release of EchoMD in June 2018. That release included AutoEF software, which fully automates clip selection and calculation of left ventricular ejection fraction (EF), the leading measurement of cardiac function.

For more information: www.baylabs.io

September 24, 2025
